The AAPB started creating transcripts as part of our “Improving Access to Time-Based Media through Crowdsourcing and Machine-Learning” grant from the Institute of Museum and Library Services (IMLS). For the initial 40,000 hours of the AAPB’s collection, we worked with Pop Up Archive to create machine-generated transcripts, which are primarily used for keyword indexing, to help users find otherwise under-described content. These transcripts are also being corrected through our crowdsourcing platforms FIX IT and FIX IT+.

As the AAPB continues to grow its collection, we have added transcript creation to our standard acquisitions workflow. Now, when the first steps of acquisition are done, i.e., metadata has been mapped and all of the files have been verified and ingested, the media is passed into the transcription pipeline. The proxy media files are either copied directly off the original drive or pulled down from Sony Ci, the cloud-based storage system that serves americanarchive.org’s video and audio files. These files are copied into a folder on the WGBH Archives’ server, where they wait for an available computer running transcription software.

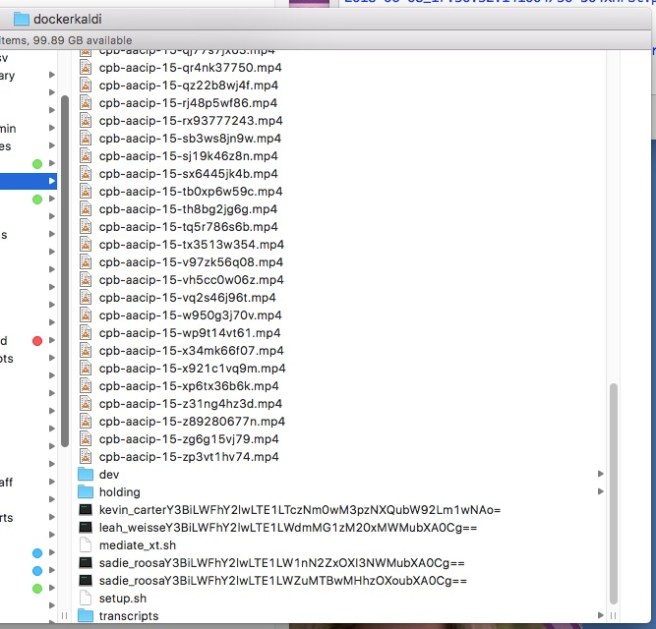

Dockerized Kaldi

The AAPB uses the Docker image of Pop Up Archive’s Kaldi, running on many machines across WGBH’s Media Library and Archives. Rather than paying additional money to run this in the cloud or on a supercomputer, we decided to take advantage of the resources we already had sitting in our department. AAPB and Archives staff at WGBH who regularly leave their computers in the office overnight are good candidates for being part of the transcription team. All they have to do is follow instructions on the internal wiki to install Docker and a simple Macintosh application, built in-house, that runs scripts in the background and reports progress to the user. The application manages launching Docker, pulling the Kaldi image (or checking that it has already been pulled), and launching the image. The user doesn’t need any specific knowledge about how Docker images work to run the application. The app gets minimized to the dock and continues to run in the background as the staff member goes about their work during the day.* But that’s not all! When they leave for the night and their computer typically wouldn’t be doing anything, it continues to transcribe media files, making use of processing power that we were already paying for but hadn’t been utilizing.

*There have been reports of systems being perceptibly slower when running this Docker image throughout the day. It has yet to have a significant impact on any staff member’s ability to do their job.

Centralized Solution

Now, we could just have multiple machines running Kaldi through Docker and that would let us create a lot of transcripts. However, it would be cumbersome and time-consuming to split the files into batches, manage starting a different batch on each computer, and collect the disparate output files from various machines at the end of the process. So we developed a centralized way of handling the input and output of each instance of Kaldi running on a separate machine.

That same Macintosh application that manages running the Kaldi Docker image also manages files in a network-shared folder on the Archives server. When a user launches the application, it checks that specific folder on the server for media files. If there are any media files in that folder, it takes the oldest file, copies it locally, and starts transcribing it. When Kaldi has finished transcribing it, the output text and JSON-formatted transcripts are copied to a subfolder on the Archives server, and the copy of the media file is deleted. Then the application checks the folder again, picks up the next media file, and the process continues.
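The "take the oldest file" step can be illustrated with a minimal Python sketch. This is not the in-house application's code: the function name, the set of media extensions, and the use of modification time as the measure of "oldest" are all assumptions made for illustration.

```python
from pathlib import Path
from typing import Optional

# Hypothetical list of extensions; the real application may recognize others.
MEDIA_EXTENSIONS = {".mp3", ".mp4", ".wav"}


def oldest_media_file(folder: Path) -> Optional[Path]:
    """Return the media file with the earliest modification time, or None.

    Assumption: "oldest" means earliest modification time on the share.
    """
    candidates = [
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in MEDIA_EXTENSIONS
    ]
    # default=None covers the case where the folder holds no media files.
    return min(candidates, key=lambda p: p.stat().st_mtime, default=None)
```

A worker loop would call a function like this, process the returned file, and call it again until it returns `None`.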

Avoiding Duplicate Effort

Now, since we have multiple computers running in parallel, all looking at the same folder on the server, how do we make sure that multiple computers aren’t duplicating efforts by transcribing the same file? Well, the process first tries to rename the file to be processed, using the person’s name and a base-64 encoding of the original filename. If the renaming succeeds, the file is copied into the Docker container for local processing, and the process on every other workstation will ignore files named that way in their quest to pick up the oldest qualifying file. After a file is successfully processed by Kaldi, it is deleted from the server, so no one else can pick it up. When Kaldi fails on a file, the file on the server is renamed to its original file name with “_failed” appended, and again the scripts know to ignore the file. A human can later go in to see if any files have failed and investigate why. (It is rare for Kaldi to fail on an AAPB media file, so this is not part of the workflow we felt we needed to automate further.)
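Here is a rough Python sketch of claiming a file by renaming it. The exact naming layout the application uses is not described here, so the `claimed.<person>.<encoded name>` pattern, the function names, and the choice of URL-safe base-64 (which avoids `/` in filenames) are assumptions. Whether a rename is atomic also depends on the file system behind the network share.

```python
import base64
from pathlib import Path
from typing import Optional

CLAIM_MARKER = "claimed"  # hypothetical prefix marking a file as in progress


def claimed_name(person: str, original_name: str) -> str:
    """Build the in-progress name from the person and the encoded original name."""
    encoded = base64.urlsafe_b64encode(original_name.encode("utf-8")).decode("ascii")
    return f"{CLAIM_MARKER}.{person}.{encoded}"


def try_claim(media_file: Path, person: str) -> Optional[Path]:
    """Try to rename the file; return the new path, or None if the rename failed."""
    target = media_file.with_name(claimed_name(person, media_file.name))
    try:
        media_file.rename(target)
    except OSError:
        # Most likely another workstation renamed the file first.
        return None
    return target


def mark_failed(claimed_file: Path, original_name: str) -> Path:
    """Rename a claimed file back to its original name with "_failed" appended."""
    failed = claimed_file.with_name(original_name + "_failed")
    claimed_file.rename(failed)
    return failed
```

The design point is that the rename itself acts as the lock: only one workstation can succeed in renaming a given file, and every other workstation simply moves on to the next candidate.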

Handling Computer and Human Errors

The centralized workflow relies on the application not quitting in the middle of a transcription. If someone shuts their laptop, the application will stop, but when they open it again, the application will pick up right where it left off. It will even continue transcribing the current file if the computer is not connected to the WGBH network, because it maintains a local copy of the file that is processing. This allows a little flexibility in terms of staff taking their computers home or to conferences.

The problem starts when the application quits, which could occur when someone quits it intentionally, someone accidentally hits the quit button rather than the minimize button, someone shuts down or restarts their computer, or a computer fails and shuts itself down automatically. We have built the application to minimize the effects of this problem. When the application is restarted, it will just pick up the next available file and keep going as if nothing happened. The only reason this is a problem at all is that the file it was in the middle of working on is still sitting on the Archives server, renamed, so another computer will not pick it up.

We consider the few downsides of this setup completely manageable:

- At regular intervals a human must look into the folder on the server to check that a file hasn’t been sitting renamed for a long time. These are easy to spot because there will be two renamed files with the same person’s name. The older of these two files is the one that was started and never finished. The filename can be changed to its original name by decoding the base-64 string. Once the name is changed, another computer will pick up the file and start transcribing.

- Because the file stopped being transcribed in the middle of the process, the processing time spent on that interrupted transcription is wasted. The next computer to start transcribing this file will start again at the beginning of the process.
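The manual recovery step, decoding the base-64 string to restore the original filename, could look something like the Python sketch below. It assumes a hypothetical naming layout of `<marker>.<person>.<URL-safe base-64 of the original name>`; the real layout used by the application may differ, and the function names are illustrative only.

```python
import base64
from pathlib import Path


def original_name_from_claim(claimed_name: str) -> str:
    """Decode the original filename from a claimed (renamed) filename.

    Assumed layout: "<marker>.<person>.<urlsafe-base64 of original name>".
    URL-safe base-64 never contains ".", so the last dot-separated part
    is always the encoded name.
    """
    encoded = claimed_name.rsplit(".", 1)[-1]
    return base64.urlsafe_b64decode(encoded.encode("ascii")).decode("utf-8")


def release_claim(claimed_file: Path) -> Path:
    """Rename a stalled file back to its original name so it can be picked up again."""
    restored = claimed_file.with_name(original_name_from_claim(claimed_file.name))
    claimed_file.rename(restored)
    return restored
```

Once the file has its original name back, it qualifies again as a candidate for the next available computer.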

Managing Prioritization

Because the AAPB has a busy acquisitions workflow, we wanted to make sure there was a way to manage prioritization of the media getting transcribed. Prioritization can be determined by many variables, including project timelines, user interest, and grant deadlines. Rather than spending a lot of time to build a system that let us track each file’s prioritization ranking, we opted for a simpler, more manual operation. While it does require human intervention, the time commitment is minimal.

As described above, the local desktop applications only look in one folder on the Archives server. By controlling what is copied into that folder, it is easy to control what files get transcribed next. The default is for a computer to pick up the oldest file in the folder. If you have a set of more recent files that you want transcribed before the rest of the files, all you have to do is remove any older files from that folder. You can easily put them in another folder, so that when the prioritized files are completed, it’s easy to move the rest of the files into the main folder.
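We do this by hand, but the same idea can be sketched in a few lines of Python. The function name, the folder arguments, the extension list, and the use of a modification-time cutoff are all hypothetical. Filtering on media extensions is meant to leave already-renamed, in-progress files where they are.

```python
import shutil
from pathlib import Path
from typing import List

# Hypothetical list of extensions; adjust to the media actually in the queue.
MEDIA_EXTENSIONS = {".mp3", ".mp4", ".wav"}


def hold_back_older_files(queue: Path, holding: Path, cutoff_mtime: float) -> List[str]:
    """Move media files older than cutoff_mtime out of the queue folder.

    Returns the names of the files that were moved, in sorted order.
    """
    holding.mkdir(parents=True, exist_ok=True)
    moved = []
    # sorted() materializes the listing before any file is moved.
    for p in sorted(queue.iterdir()):
        is_media = p.is_file() and p.suffix.lower() in MEDIA_EXTENSIONS
        if is_media and p.stat().st_mtime < cutoff_mtime:
            shutil.move(str(p), str(holding / p.name))
            moved.append(p.name)
    return moved
```

When the prioritized files are finished, moving everything from the holding folder back into the queue restores the normal oldest-first order.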

For smaller sets of files that need to be transcribed, we can also have someone who is not running the application stand up an instance of dockerized Kaldi and run the media through it locally. Their machine won’t be tied into the folder on the server, so they will only process those prioritized files they feed Kaldi locally.

Transforming the Output

At any point we can go to the Archives server and grab the transcripts that have been created so far. These transcripts are output as text files and as JSON files which pair time-stamp data with each word. However, the AAPB prefers JSON transcripts that are time-stamped at each 5-7 second phrase.

We use a script that parses the word-stamped JSON files and outputs phrase-stamped JSON files.
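To give a sense of what such a conversion involves, here is a minimal Python sketch. It is not our actual script. The input keys (`word`, `start`, `end`), the output keys (`start_time`, `end_time`, `text`), and the grouping rule are assumptions chosen for illustration, and the real Kaldi output and AAPB format may be structured differently.

```python
from typing import Dict, List


def words_to_phrases(
    words: List[Dict],
    min_seconds: float = 5.0,
    max_seconds: float = 7.0,
) -> List[Dict]:
    """Group word-level timestamps into phrases of roughly 5 to 7 seconds.

    Assumed input: [{"word": str, "start": float, "end": float}, ...]
    in chronological order.
    """
    phrases: List[Dict] = []
    current: List[Dict] = []

    def flush() -> None:
        # Emit the words collected so far as one phrase, then start over.
        if current:
            phrases.append({
                "start_time": current[0]["start"],
                "end_time": current[-1]["end"],
                "text": " ".join(w["word"] for w in current),
            })
            current.clear()

    for word in words:
        # Close the phrase first if this word would push it past the maximum.
        if current and word["end"] - current[0]["start"] > max_seconds:
            flush()
        current.append(word)
        # Close the phrase once it has reached the minimum length.
        if current[-1]["end"] - current[0]["start"] >= min_seconds:
            flush()

    flush()  # Emit any trailing words as a final, possibly shorter, phrase.
    return phrases
```

Reading the word-stamped file with `json.load` and writing the result with `json.dump` would complete the round trip.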

Word time-stamped JSON

Phrase time-stamped JSON

Once we have the transcripts in the preferred AAPB format, we can use them to make our collections more discoverable and share them with our users. More on that part of the workflow in Part 2 (coming soon!).